Welcome to the second edition of the University of Technology Sydney (UTS) Data and AI Ethics Cluster’s newsletter. Please forward it widely to friends and colleagues who may be interested. If you’ve been forwarded the newsletter, you can subscribe at the button below.

Global efforts to regulate AI intensify

The widespread adoption of large language models and generative AI tools this year has led to intensified efforts to regulate AI around the world. US Federal Trade Commission chair Lina Khan penned an op-ed earlier this month, comparing this moment in AI to the rise of the major platforms in the mid-2000s – the Web 2.0 era and its regulatory failures, which led to “concentrating enormous private power over key services and locking in business models that come at extraordinary cost to our privacy and security”. She argued that policymakers are now at another crucial moment of choice: “As the use of AI becomes more widespread, public officials have a responsibility to ensure this hard-learned history doesn’t repeat itself.”

The regulation of AI in the US remains largely voluntary right now, though there has been a recent flurry of new initiatives. The White House’s Office of Science and Technology Policy (OSTP) issued a Blueprint for an AI Bill of Rights last year, a project led by then-OSTP Director Dr Alondra Nelson, who spoke at an event organised by UTS’s Human Technology Institute last September. In January, the US National Institute of Standards and Technology introduced a voluntary AI Risk Management Framework. On 23 May, the Biden administration announced minor additional moves, including a public consultation seeking input on AI risks, human rights and safety. Democratic Senate leader Chuck Schumer revealed last month that he has been working to gather support from Congress for a “high-level framework” on AI that he hopes will result in comprehensive federal legislation.

On the other side of the Atlantic, the European Parliament is in the process of finalising its position on the AI Act (see article below). And in April, the Cyberspace Administration of China proposed draft regulations to govern generative AI outputs, prohibiting companies from training AI systems on copyrighted material and personal data scraped without consent – as well as doubling down on censorship. An earlier regulation that came into force in January requires AI providers to watermark AI-generated content.

The Australian government has remained notably quiet on AI regulation, relying on existing laws and its voluntary framework of eight AI Ethics Principles. This month, Human Rights Commissioner Lorraine Finlay urged the Australian government to act, and wrote that unless it is “prepared to step up and show leadership, we are likely to see the risks to human rights increase exponentially”.

We were all watching on 16 May when a US Senate hearing on AI oversight and governance heard testimony from OpenAI CEO Sam Altman, IBM’s chief privacy officer Christina Montgomery, and Gary Marcus, NYU professor emeritus. Both corporate representatives emphasised their internal ethical standards, and argued that AI governance should primarily be a matter of self-regulation, with only limited “precision regulation” by government that targets AI in specific use cases rather than regulating the technology itself.

In a carefully phrased statement, Altman said OpenAI is “eager to help policymakers as they determine how to facilitate regulation that balances incentivizing safety while ensuring that people are able to access the technology’s benefits”. In response, Marcus pointed out that despite OpenAI’s claims it supported independent audits and independent advance reviews of AI systems, it had so far submitted to neither. “We have to stop letting them set all the rules,” he told senators.

Meanwhile, data privacy regulators have begun investigating ChatGPT for potential breaches. The Italian data privacy regulator temporarily banned ChatGPT, and issued a list of requirements on 11 April requiring OpenAI to comply with EU data protection laws. On 28 April, the regulator lifted the ban, in response to OpenAI enacting limited changes to ChatGPT in the EU, including giving users the right to correct false information about them created by ChatGPT, and opt out of having their personal data used to train AI systems. Alarmingly, it was enough for the regulator that OpenAI said it would enable “data subjects to obtain erasure of information that is considered inaccurate, whilst stating that it is technically impossible, as of now, to rectify inaccuracies”. The mind boggles. Spain, France and Germany also opened investigations into potential privacy breaches by ChatGPT in April, and the European Data Protection Board announced the creation of a task force to “foster cooperation and to exchange information on possible enforcement actions conducted by data protection authorities”.

Earlier this week, in response to the European Parliament vote on its proposed AI Act, Altman threatened to withdraw from the EU if ChatGPT is categorised as “high-risk” and therefore required to comply with higher transparency, accountability, safety and ethical standards. “If we can comply, we will, and if we can’t, we’ll cease operating,” he said. OpenAI doesn’t seem to be taking even weak efforts by governments to regulate it seriously. And it is not alone – tech companies seem to be very good at setting the rules for their own regulation. Have governments learned from such tactics? We’ll soon find out.

By Emma Clancy and Heather Ford, co-coordinators of the UTS Data and AI Ethics Cluster.

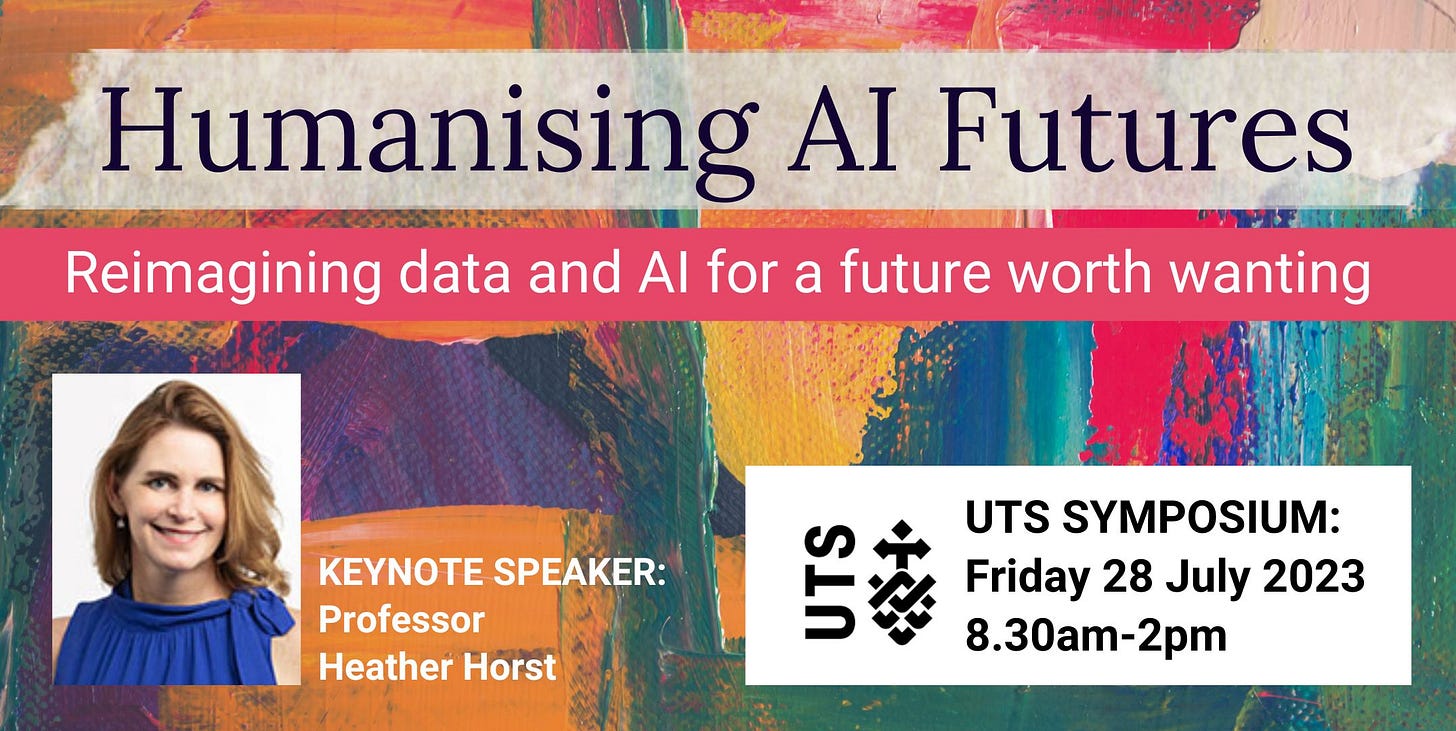

Humanising AI Futures: July symposium at UTS

SAVE THE DATE: Friday 28 July, 2023

In this half-day symposium, we ask: How are data and AI influencing the ways in which we live and relate to one another? How might data and AI be better designed and governed to support cohesive societies? What is standing in the way? Be sure to save the date for the “Humanising AI Futures” symposium, dedicated to understanding the future of the human-machine relationship in the context of AI and the role of research in understanding and shaping that future.

The symposium will be hosted by UTS’s Faculty of Arts and Social Sciences, and is organised by the UTS Data and AI Ethics Cluster. Our Deputy Vice-Chancellor and Vice-President (Research), Professor Kate McGrath, will open the symposium. Professor Heather Horst, Chief Investigator for the ARC Centre of Excellence for Automated Decision-Making & Society (ADM+S) and Director of the Institute for Culture and Society at Western Sydney University, will be the keynote speaker, presenting “The Human in the Machine: Understanding Context, Creativity, and Collaboration in AI Futures”.

Researchers from nine centres, schools and faculties will present a showcase of research projects that explore how data and AI are being understood, governed and reimagined. Three senior UTS researchers will then give presentations articulating the most pressing research questions that need answering. The symposium will conclude with opportunities for others to contribute to a roadmap for UTS research into ethical AI in the coming months.

By Heather Ford.

European Parliament moves closer to banning harmful AI

An important committee vote in the European Parliament on 11 May has called for a ban on the use of real-time biometric surveillance in public spaces, predictive policing, social scoring and other harmful uses of AI systems. The AI Act text adopted by the civil liberties (LIBE) and internal market and consumer protection (IMCO) committees has yet to be endorsed by the full Parliament, but indicates MEPs will take a strong position on human rights in future negotiations with the European Commission and Council of member states.

When the Commission proposed the AI Act in 2021, a prohibition on facial recognition was notably missing. Civil society organisations organised a strong pressure campaign (#ReclaimYourFace), demanding a ban on biometric identification technologies. The Parliament’s largest political group, the centre-right Christian Democrats, opposed a ban, but eventually conceded when it was clear they did not have a majority. As such, advocates for human rights, digital rights and consumer protection have welcomed the outcome.

Despite this big win, there are serious shortcomings in the text. MEPs endorsed the Commission’s overarching risk-based framework, which relies heavily on a self-regulation model. There are four risk categories for AI technologies, each with corresponding governance requirements – “unacceptable risk” (prohibited), “high-risk” (conformity assessments), “limited risk” (transparency obligations), and “low or minimal risk” (no obligations). For high-risk systems, providers will be required to undergo a “conformity assessment” ensuring compliance with transparency, accountability, safety and ethical standards. The Parliament report lauds the virtues of external oversight of high-risk systems, but goes on to say that public authorities lack the technical expertise to establish independent third-party conformity assessments – so companies will be responsible for their own assessment internally for most high-risk systems for the foreseeable future.

Large language models and generative AI systems, not mentioned in the Commission’s original proposal, will not be categorised as high-risk, but the report introduces certain new obligations on providers, such as a requirement to assess and mitigate risks. There is a rather vaguely worded statement that generative AI providers “should ensure transparency about the fact the content is generated by an AI system” but no specific requirements such as watermarking.

The report could yet be amended by MEPs in the full plenary vote of the Parliament on 14 June. Then the Parliament will enter inter-institutional negotiations (“trilogues”) with the Commission and Council, where it will have to fight to retain the progressive content added by MEPs. The ad-hoc “trilogue” process happens behind closed doors, with absolutely no public scrutiny, and has been described as “the place where European democracy goes to die”. As this is the first attempt internationally to introduce a horizontal regulatory framework for AI, we will be watching the outcome closely.

By Emma Clancy.

Each month, our newsletter will feature an interview with a UTS researcher working in the area of data and AI ethics. This month we spoke to Linda Przhedetsky, Associate Professor at the Human Technology Institute.

Linda Przhedetsky: ‘I was impressed with the diversity of research at UTS’

Why have you chosen UTS to work on your research?

It was an easy decision. I had a rough idea of the topic I wanted to explore for my PhD, and had a chat with potential supervisors at different universities. I spoke with Sacha Molitorisz at UTS, who was engaged and enthusiastic – he could see how the project could take shape, and showed me the diversity of research topics that were being explored within UTS Law. Since I started my PhD in 2020, I have really appreciated that UTS values transdisciplinary approaches, and encourages people to bring their personal and professional experiences into their academic work. This creates a welcoming space for diverse researchers. In 2022, I also started working part-time at UTS! I’ve been with the Human Technology Institute since September last year, and am really excited to be part of a talented team of practitioners, policy professionals, researchers, project managers and subject matter experts who are bringing human-centred values to AI.

What inspired you to focus your research on data and AI ethics?

I was working in consumer policy and ended up doing a considerable amount of work on shaping the development of the Consumer Data Right. I loved thinking about the immense challenges and opportunities that data presented and have been engaged in the field ever since. There are so many complexities to navigate in the ever-changing data policy landscape that I constantly feel like I’m having to push my knowledge and intellect when working in this space. I also owe a huge thank you to Professors Tiberio Caetano from the Gradient Institute and Kimberlee Weatherall from University of Sydney Law School, who saw my interest in AI and encouraged me to pursue this area academically and professionally.

Can you tell us about an interesting project you're working on right now?

My PhD research looks at how data is used by algorithms that screen and sort people who have applied for rental housing. Specifically, I look at the landscape of consumer protections (or lack thereof) that prevent people from experiencing harm resulting from these systems. Recently, I contributed to the CHOICE report, At what cost? The price renters pay to use RentTech – and it was exciting to see these issues getting some media attention. Coming from an advocacy background, I’m really passionate about making sure that my research has a meaningful impact, and moments like these make me really excited about using my work to create change.

Another project that I’m excited about is a book chapter that I worked on with Dr Zofia Bednarz at University of Sydney. It’s called ‘AI Opacity in the Financial Industry and How to Break It’, which will be published in Money, Power and AI via Cambridge University Press. It’s my first book chapter, and it’s part of a really exciting collection!

Our work

‘Uncertainty: Nothing is More Certain’: Director of the UTS Human Technology Institute, Professor Sally Cripps, delivered a public lecture this month exploring the difficulties of making decisions with limited information, and how AI systems aid in decision making. Listen here.

Conferences and events

Byron Writers’ Festival: Dr Suneel Jethani (Digital and Social Media) will be speaking on two panels at the Byron Writers’ Festival on 12 August – ‘Invisible Strings – Data and power at work in our everyday world’ at 11am with Antony Loewenstein and Paul Barclay, and ‘The Ethics of AI’ with Grace Chan and Tracey Spicer, chaired by Julianne Schultz.

ADM+S Symposium: The ARC Centre of Excellence for Automated Decision-Making and Society (ADM+S) 2023 Symposium, themed ‘Automated News and Media’, is happening at the University of Sydney’s Camperdown campus on 13-14 July 2023. Read the program here.

What we’re reading

High Risk Hustling: Payment Processors, Sexual Proxies, and Discrimination by Design

Authors: Zahra Stardust, Danielle Blunt, Gabriella Garcia, Lorelei Lee, Kate D'Adamo & Rachel Kuo (2023).

Published by: CUNY Law Review

Towards platform observability

Authors: Bernhard Rieder and Jeanette Hofmann (2020)

Published by: Internet Policy Review

Other news

Human Technology Skills Lab: The UTS Human Technology Institute (HTI) has established the Human Technology Skills Lab to build Australia's capability in strategic skills associated with AI and other new technology, with a focus on the procurement, implementation and oversight of AI. As part of the Human Technology Skills Lab, HTI offers Strategic AI Courses for the legal sector. Find out more here.

What is the UTS Data and AI Ethics Cluster?

The University of Technology Sydney (UTS) Data and AI Ethics Cluster is a network of researchers at UTS who conduct research into the social and ethical implications of data and AI technologies and who rethink how AI might support human flourishing. Researchers in the UTS Data and AI Ethics Cluster work to understand how and why people create, use, and abuse data and AI technologies in the ways that they do. We work with governments and organisations to think through modes of regulating such technologies that respect no borders and defy previous principles of governance. We work across disciplines to question, investigate and celebrate such technologies creatively. We explore what it means to be a public university of technology, committed as we are to the university as a place for reimagining what data and AI technologies might do and for whom they might do it. We focus not on our disciplinary differences but on what principles and practices we share.

The 2023 Coordinators are Dr Heather Ford, Associate Professor, School of Communications, and Emma Clancy, PhD Candidate, School of Communications.